Deploying Open-Source EFK with Docker Compose and Using Winlogbeat to Collect and Analyze Windows Logs

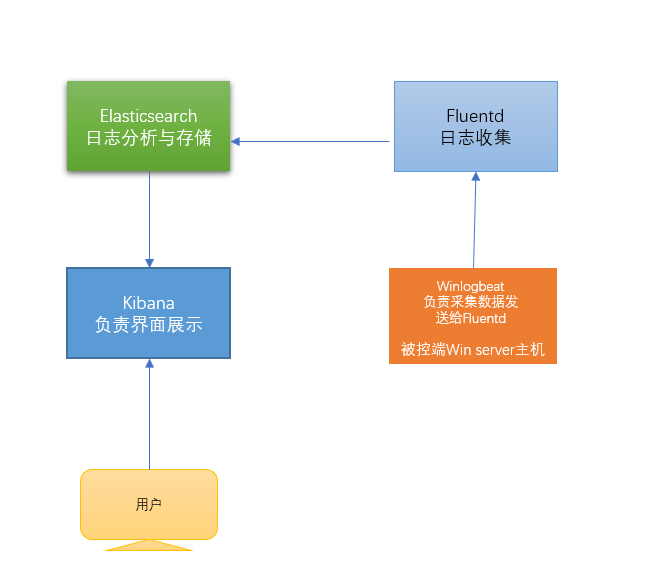

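Concepts and architecture

Elasticsearch + Fluentd + Kibana, EFK for short, is an open-source stack for log search and visualization. Elasticsearch stores and analyzes the logs, Fluentd collects them, and Kibana provides the web interface. The basic flow: Fluentd obtains log content in real time by reading log files, sends it to Elasticsearch, and Kibana displays it.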

Environment requirements

This is a single-node demonstration. Docker and docker-compose must already be installed on the CentOS host.

| Host | OS | IP |
|---|---|---|
| Elasticsearch | CentOS 7.8 | 172.16.252.66 |
| Fluentd | CentOS 7.8 | 172.16.252.66 |
| Kibana | CentOS 7.8 | 172.16.252.66 |
| DC (monitored host for this demo) | Windows Server 2012 R2 | 172.16.252.110 |
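
Every later step assumes that Docker and docker-compose are on the PATH of the CentOS host, so a quick check can save a failed run. The snippet below is a minimal sketch and not part of the original procedure; the helper name `missing_commands` is made up for illustration.

```shell
# Print every command from the argument list that is not found on PATH.
# (missing_commands is a hypothetical helper name, not a Docker tool.)
missing_commands() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

missing="$(missing_commands docker docker-compose)"
if [ -n "$missing" ]; then
  # Join the missing names onto one line for the message.
  echo "Missing prerequisites: $(echo $missing)" >&2
else
  echo "docker and docker-compose are both installed"
fi
```

If either name is reported as missing, install it before continuing with the directory setup.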

Preparing the directory structure

# Directory structure (under /app)
efk
├── data
├── docker-compose.yml
└── fluentd
    ├── conf
    │   └── fluent.conf
    └── Dockerfile
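
As a rough sketch, the layout above can be created and verified under any root directory before the real files are written. The function names `create_efk_layout` and `check_efk_layout` are hypothetical, and the demonstration uses a temporary directory rather than the real project path.

```shell
# Create the EFK project layout under the given root directory.
# (create_efk_layout and check_efk_layout are hypothetical helper names.)
create_efk_layout() {
  root="$1"
  mkdir -p "$root/data" "$root/fluentd/conf"
  # Empty placeholders; their contents are written in the later steps.
  touch "$root/docker-compose.yml" \
        "$root/fluentd/Dockerfile" \
        "$root/fluentd/conf/fluent.conf"
}

# Return 0 only if every expected directory and file exists.
check_efk_layout() {
  root="$1"
  [ -d "$root/data" ] &&
  [ -d "$root/fluentd/conf" ] &&
  [ -f "$root/docker-compose.yml" ] &&
  [ -f "$root/fluentd/Dockerfile" ] &&
  [ -f "$root/fluentd/conf/fluent.conf" ]
}

# Demonstration in a throwaway directory.
demo_root="$(mktemp -d)"
create_efk_layout "$demo_root"
check_efk_layout "$demo_root" && echo "layout OK under $demo_root"
```

Running `check_efk_layout` again after the files have been edited is a cheap way to confirm that nothing was saved to the wrong path.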

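# Create the directory structure
sudo mkdir -p /app/efk/{data,fluentd/conf}

# Give the data directory 777 permissions; otherwise the Elasticsearch container cannot write to it and fails to start
sudo chmod 777 /app/efk/data

Start deployment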

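# Dockerfile
sudo vim /app/efk/fluentd/Dockerfile

# Write the following content
FROM fluent/fluentd:v1.9.1-debian-1.0
USER root
# No version is pinned, so the latest plugin release is installed; pin one with -v if it proves incompatible with Elasticsearch 7.8
RUN gem install fluent-plugin-elasticsearch
USER fluent

# Build the fluentd image from the directory that contains the Dockerfile
cd /app/efk/fluentd
sudo docker build -t custom-fluentd:latest ./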

Fluentd configuration

# Write the fluentd configuration file
sudo vim /app/efk/fluentd/conf/fluent.conf

<source>
  @type forward
  port 24224
  bind 0.0.0.0
</source>

<match *.**>
  @type copy
  <store>
    @type elasticsearch
    host elasticsearch
    port 9200
    # Once security is enabled in the password step later on, also set `user` and `password` here so Fluentd can still write to Elasticsearch
    logstash_format true
    logstash_prefix fluentd
    logstash_dateformat %Y%m%d
    include_tag_key true
    type_name access_log
    tag_key @log_name
    flush_interval 1s
  </store>
  <store>
    @type stdout
  </store>
</match>

Writing docker-compose.yml

version: '2'
services:
  nginx:
    image: nginx
    container_name: nginx
    restart: always
    ports:
      - '8001:80'
    links:
      - fluentd
    logging:
      driver: 'fluentd'
      options:
        fluentd-address: localhost:24224
        tag: nginx
  fluentd:
    image: custom-fluentd
    container_name: fluentd
    restart: always
    volumes:
      - ./fluentd/conf:/fluentd/etc
    links:
      - 'elasticsearch'
    ports:
      - '24224:24224'
      - '24224:24224/udp'
  elasticsearch:
    image: elasticsearch:7.8.1
    container_name: elasticsearch
    restart: always
    ports:
      - '9200:9200'
    environment:
      - 'discovery.type=single-node'
      - 'cluster.name=docker-cluster'
      - 'bootstrap.memory_lock=true'
      - 'ES_JAVA_OPTS=-Xms1g -Xmx1g' # Allow at least 1 GB of memory here; otherwise the elasticsearch container fails to start
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./data:/usr/share/elasticsearch/data
  kibana:
    image: kibana:7.8.1
    container_name: kibana
    restart: always
    links:
      - 'elasticsearch'
    ports:
      - '5601:5601'

Starting the services

# From the efk directory, start the stack using docker-compose.yml
sudo docker-compose up -d

Setting passwords

# Elasticsearch 7.8
# Enter the Elasticsearch container
docker exec -it elasticsearch /bin/bash

# Edit the Elasticsearch configuration file elasticsearch.yml
vi /usr/share/elasticsearch/config/elasticsearch.yml

# Add the following
xpack.security.enabled: true
xpack.license.self_generated.type: basic
xpack.security.transport.ssl.enabled: true

# Save and leave the container
exit

# Restart Elasticsearch
docker restart elasticsearch

# After the restart, enter the Elasticsearch container again
docker exec -it elasticsearch /bin/bash

# Change into the Elasticsearch bin directory
cd /usr/share/elasticsearch/bin

# Set the passwords interactively (keep a record of all of them)
./elasticsearch-setup-passwords interactive

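# Kibana 7.8
# Enter the Kibana container
docker exec -it kibana /bin/bash

# Edit the Kibana configuration file kibana.yml
vi /usr/share/kibana/config/kibana.yml

# Add the following
i18n.locale: "zh-CN" # Switches the interface to Simplified Chinese (optional)
elasticsearch.username: "kibana"
elasticsearch.password: "passwd1!" # The password set for the kibana user in the previous step

# Save and leave the container
exit

# Restart Kibana
docker restart kibana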
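
Kibana can take a while to answer requests after a restart, so polling is more reliable than guessing when it is ready. The helper below is a minimal sketch: `wait_for` is a hypothetical name, and the `curl` line in the closing comment is only one possible readiness check for this setup.

```shell
# Run a command repeatedly until it succeeds or the attempts are used up.
# Arguments: <max attempts> <seconds between attempts> <command...>
# (wait_for is a hypothetical helper name.)
wait_for() {
  attempts="$1"
  delay="$2"
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Harmless demonstration: "true" succeeds on the first attempt.
wait_for 3 1 true && echo "ready"

# Against this setup (not executed here), something like:
#   wait_for 30 5 curl -fs -o /dev/null http://172.16.252.66:5601/
# With -f, curl treats any 4xx/5xx response as a failure, so the loop
# keeps waiting while Kibana still answers with an error status.
```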

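# Once the container is up, open http://172.16.252.66:5601 in a browser to reach the web interface (plain HTTP, since no TLS is configured for Kibana here)

Installing Winlogbeat on Windows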

Download Winlogbeat

URL: https://www.elastic.co/downloads/beats/winlogbeat

# Edit winlogbeat.yml
... ....
setup.kibana:
  # Kibana Host
  # Scheme and port can be left out and will be set to the default (http and 5601)
  # In case you specify an additional path, the scheme is required: http://localhost:5601/path
  # IPv6 addresses should always be defined as: https://[2001:db8::1]:5601
  host: "172.16.252.66:5601" # Change to the IP and port of the Kibana server
... ...
# ---------------------------- Elasticsearch Output ----------------------------
output.elasticsearch:
  # Array of hosts to connect to.
  hosts: ["172.16.252.66:9200"] # Change to the address and port of the Elasticsearch server
  # Protocol - either `http` (default) or `https`.
  #protocol: "https"
  # Authentication credentials - either API key or username/password.
  #api_key: "id:api_key"
  username: "elastic" # Set this if a user has been configured
  password: "passwd1!" # Set this if a password has been configured
... ...
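
In this single-host demo both `setup.kibana.host` and `output.elasticsearch.hosts` should point at the same server, and a mistyped octet is easy to miss. The check below is a hedged sketch: `config_ips` is a hypothetical helper, and it assumes a POSIX shell is available wherever the file is edited (for example Git Bash or WSL on Windows, or the CentOS host before the file is copied over).

```shell
# Print the distinct IPv4 addresses used on active (uncommented)
# "host:" and "hosts:" lines of a Beats-style YAML file.
# (config_ips is a hypothetical helper name.)
config_ips() {
  grep -E '^[[:space:]]*hosts?:' "$1" |
    grep -oE '[0-9]{1,3}(\.[0-9]{1,3}){3}' |
    sort -u
}

# Demonstration on a throwaway file that mimics the two settings above.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
setup.kibana:
  host: "172.16.252.66:5601"
output.elasticsearch:
  hosts: ["172.16.252.66:9200"]
EOF
config_ips "$sample"   # a single address means both settings agree
rm -f "$sample"
```

More than one address in the output suggests that one of the two settings still points somewhere else.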

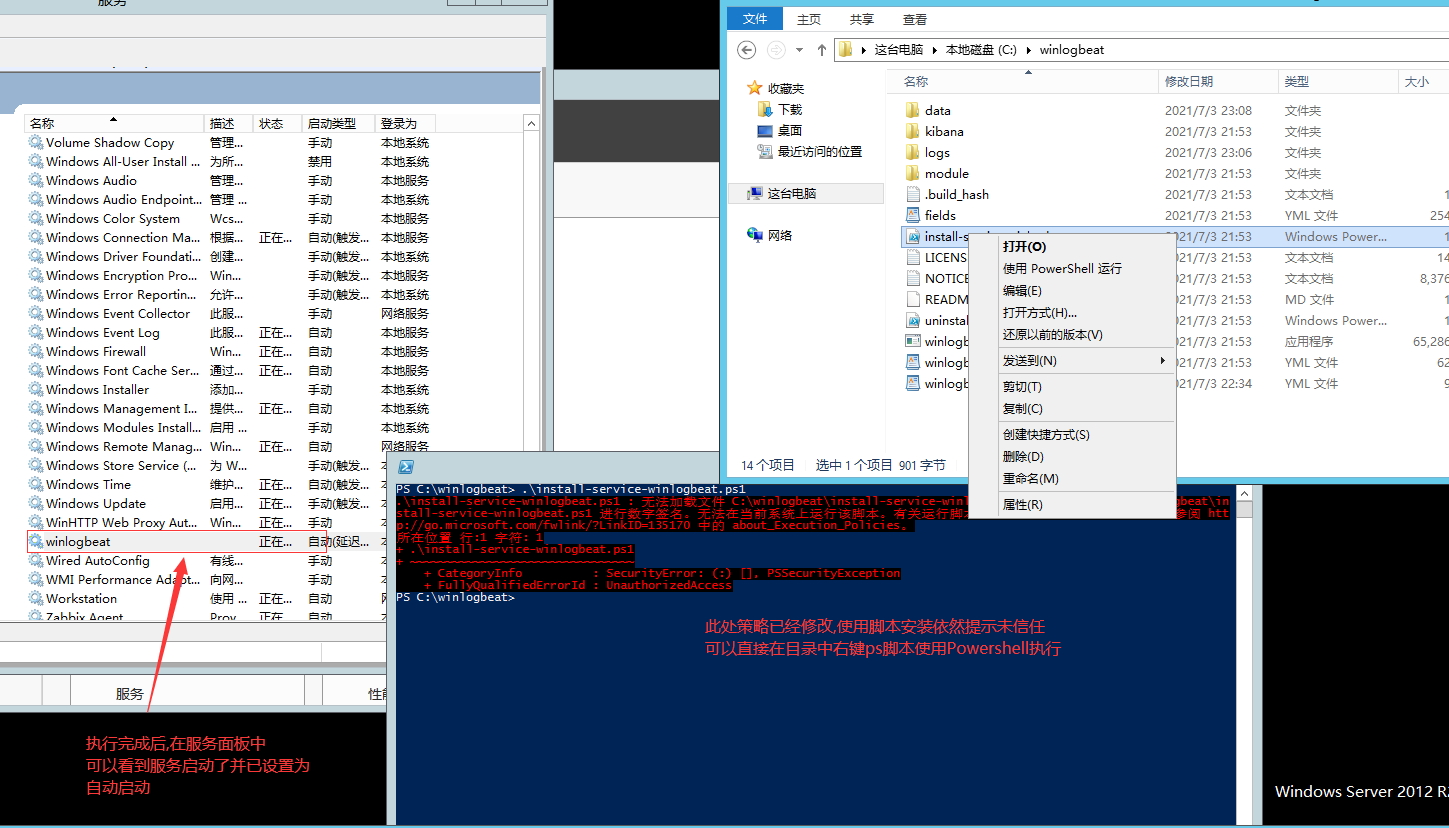

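Enabling Winlogbeat on Windows

# Extract the downloaded Winlogbeat archive on the server whose logs you want to collect, open PowerShell as Administrator, change into that directory, and run:
.\install-service-winlogbeat.ps1 # Run the service installation script
Set-ExecutionPolicy RemoteSigned # If PowerShell refuses to run the script because it is not signed, relax the execution policy with this command, restart the server, and run the installation script again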

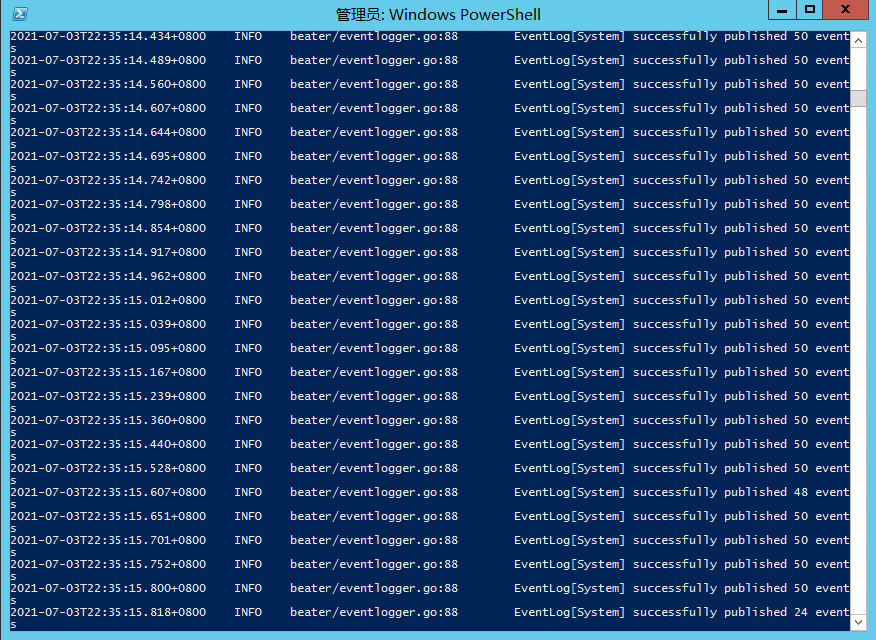

.\winlogbeat.exe -c winlogbeat.yml -e # Run in the foreground as a test
.\winlogbeat.exe setup -e # Load the Kibana dashboards
Start-Service winlogbeat # Start the service

Winlogbeat service installation

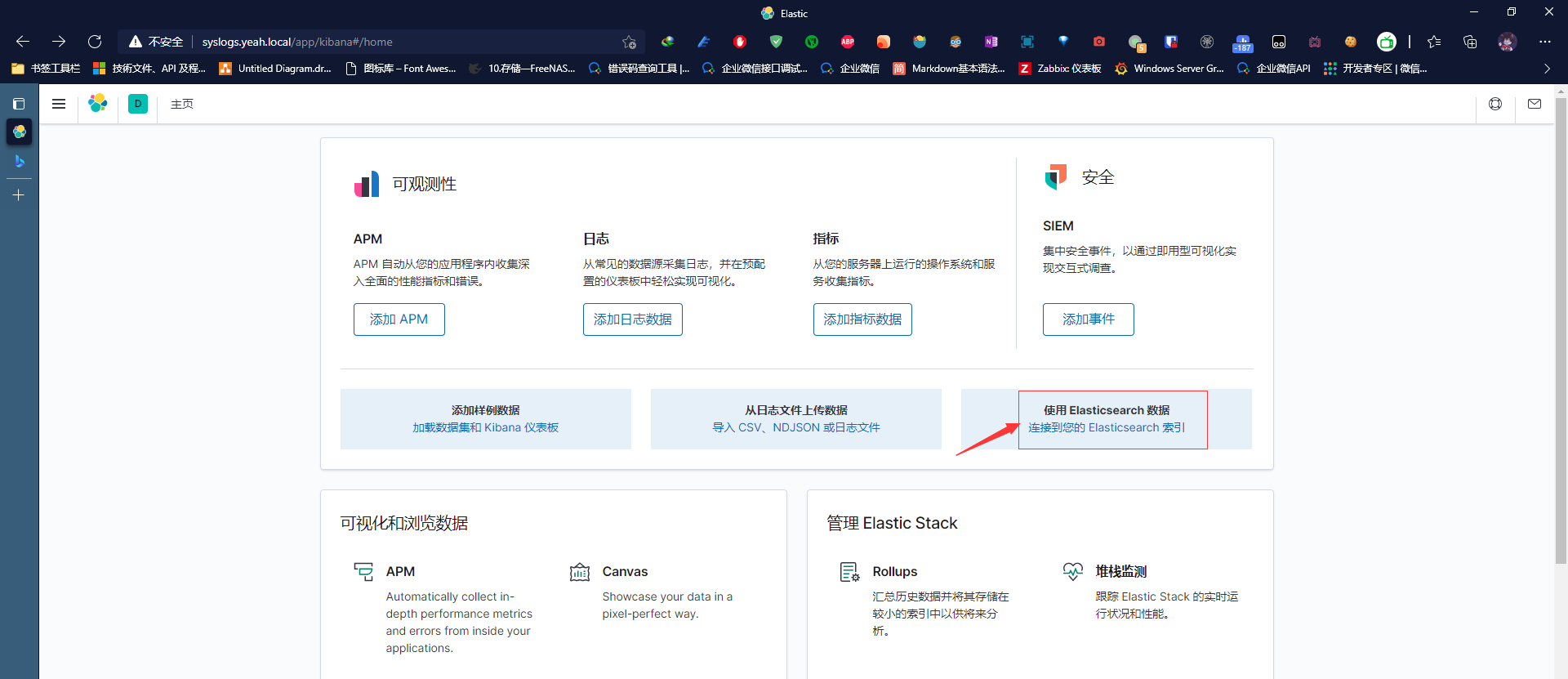

Kibana front-end configuration

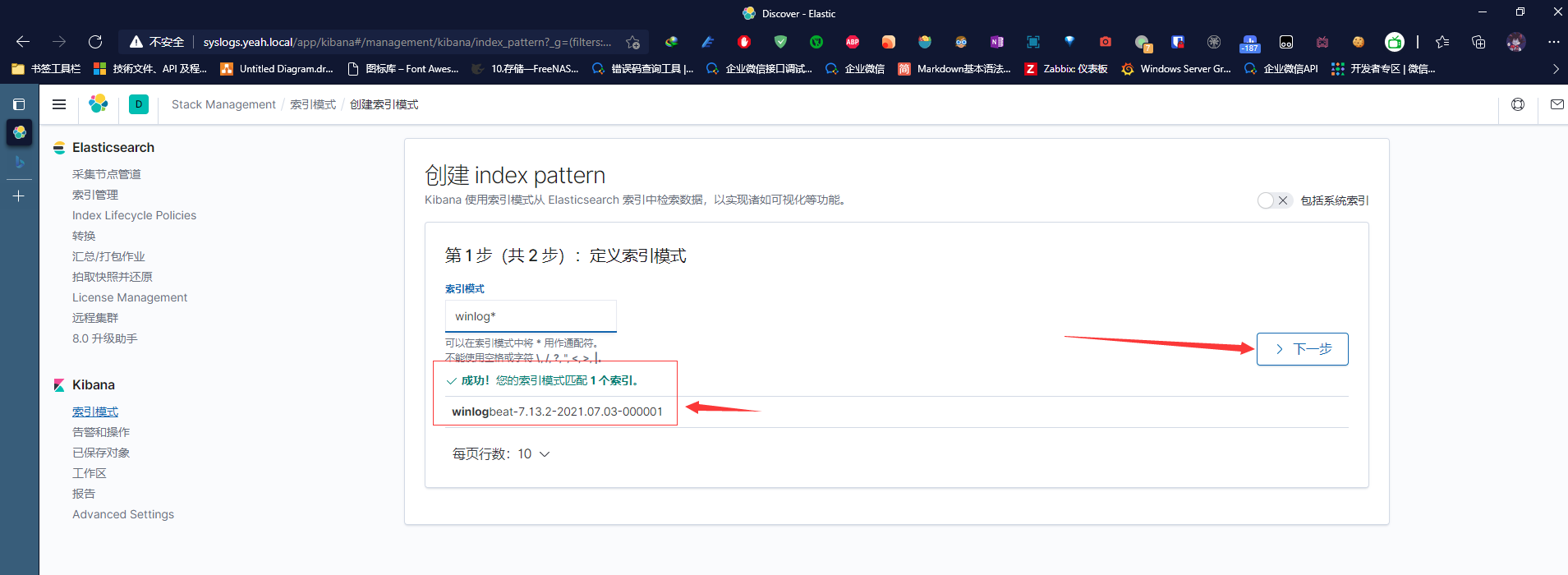

Adding a log index

Add the winlogbeat index pattern

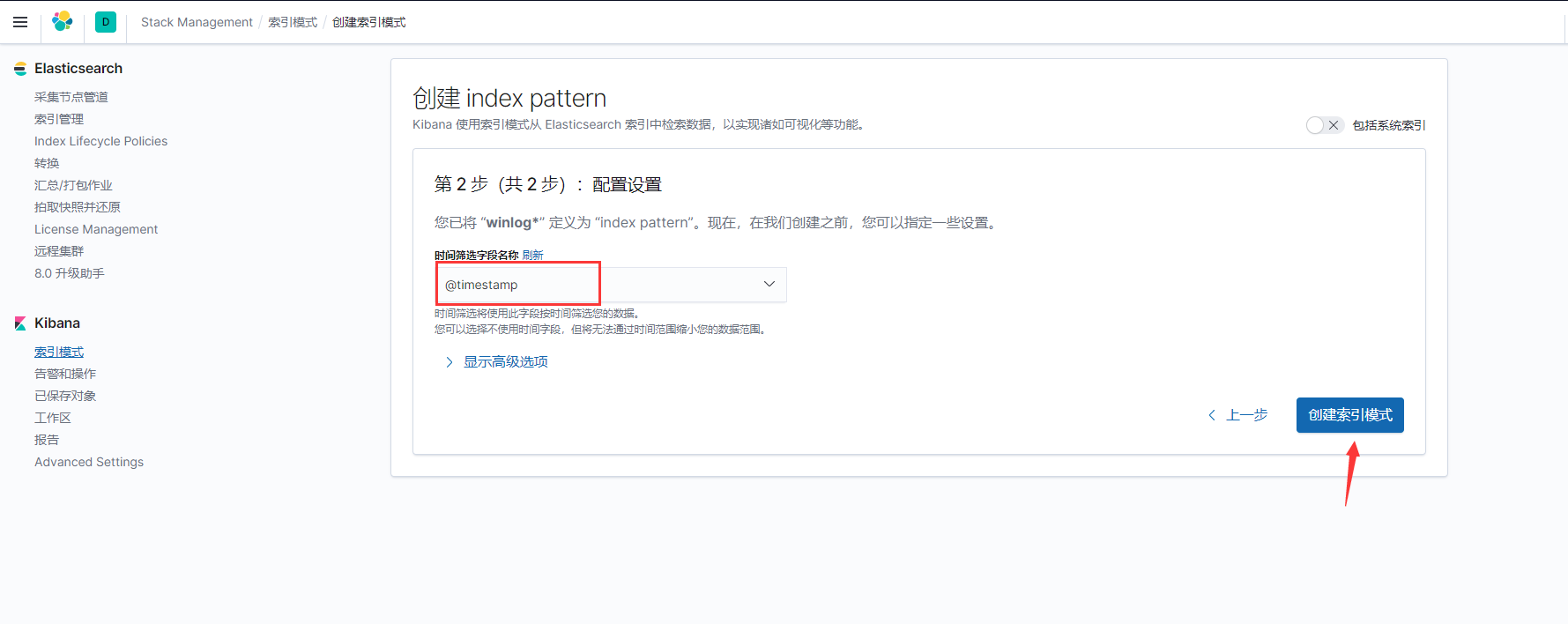

Create the winlogbeat index pattern with the timestamp as its time field

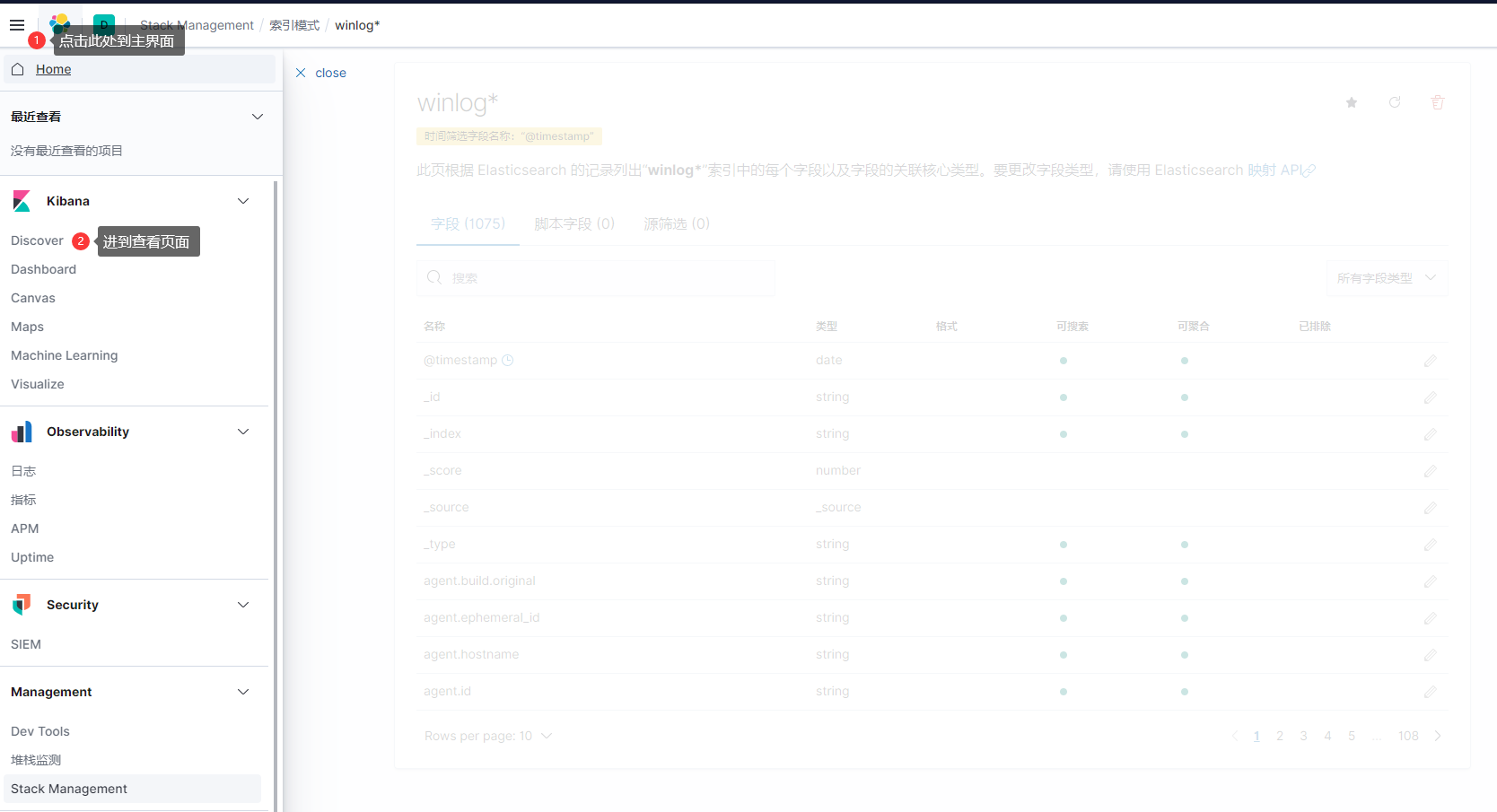
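
Kibana index patterns select indices by name using `*` wildcards, so a winlogbeat pattern will not pick up what Fluentd writes. As a rough sketch: with `logstash_format true`, `logstash_prefix fluentd` and `logstash_dateformat %Y%m%d` in fluent.conf, Fluentd writes one index per day named `fluentd-YYYYMMDD`, which needs its own pattern such as `fluentd-*`. The helper names below are hypothetical, and the shell `case` glob only approximates Kibana's wildcard matching.

```shell
# Name of the daily index Fluentd writes for a date given as YYYY-MM-DD,
# following logstash_prefix "fluentd" and logstash_dateformat "%Y%m%d".
# (fluentd_index_name and matches_pattern are hypothetical helper names.)
fluentd_index_name() {
  printf 'fluentd-%s\n' "$(printf '%s' "$1" | tr -d '-')"
}

# Return 0 if an index name matches a wildcard pattern such as "fluentd-*".
matches_pattern() {
  case "$1" in
    $2) return 0 ;;
    *)  return 1 ;;
  esac
}

idx="$(fluentd_index_name 2020-08-15)"
echo "$idx"
matches_pattern "$idx" 'fluentd-*' && echo "covered by fluentd-*"
matches_pattern "$idx" 'winlogbeat-*' || echo "not covered by winlogbeat-*"
```

The same reasoning applies in the other direction: the Windows events shipped by Winlogbeat only appear under a pattern that matches the winlogbeat index names.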

Confirm the index contents

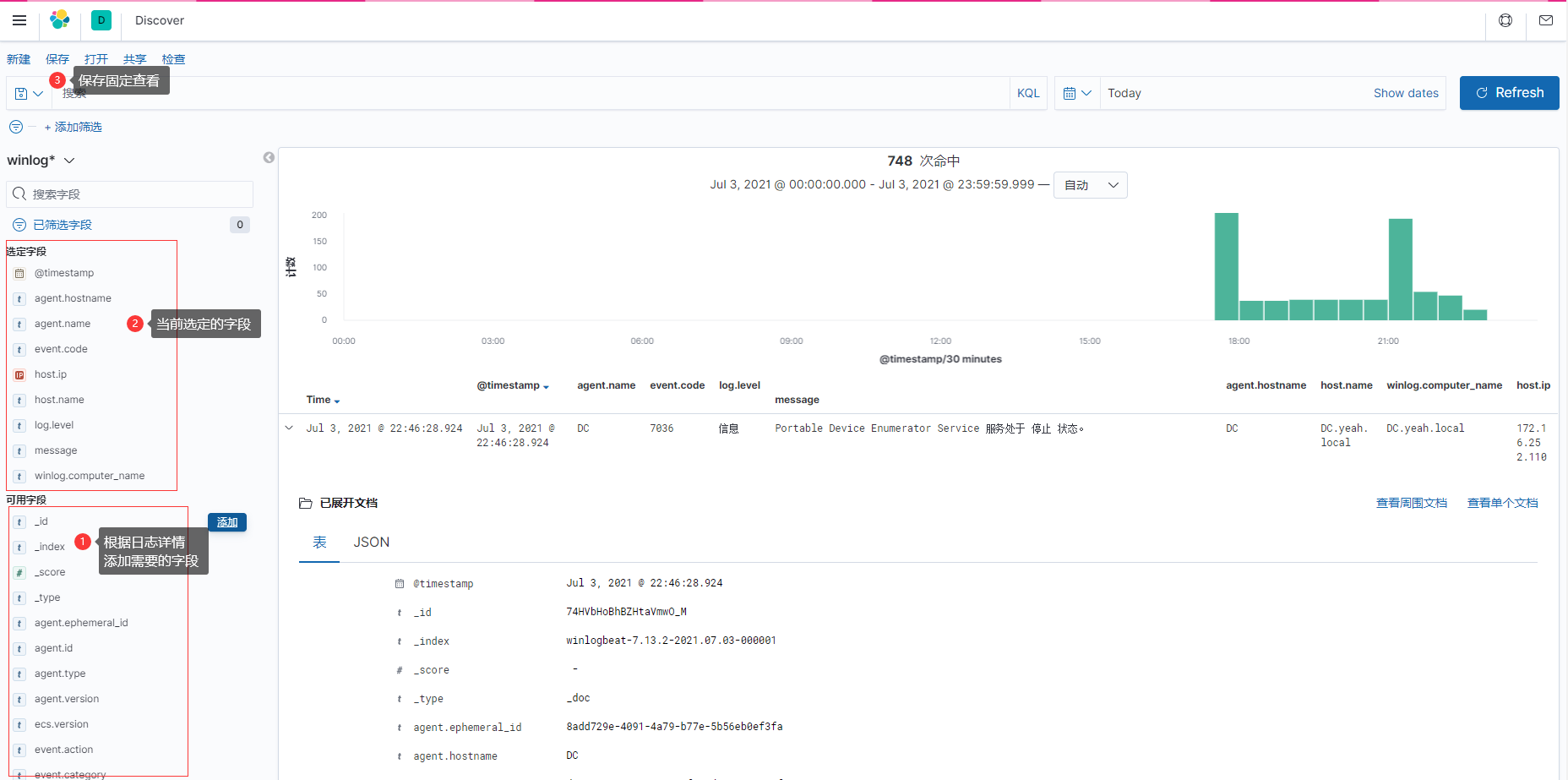

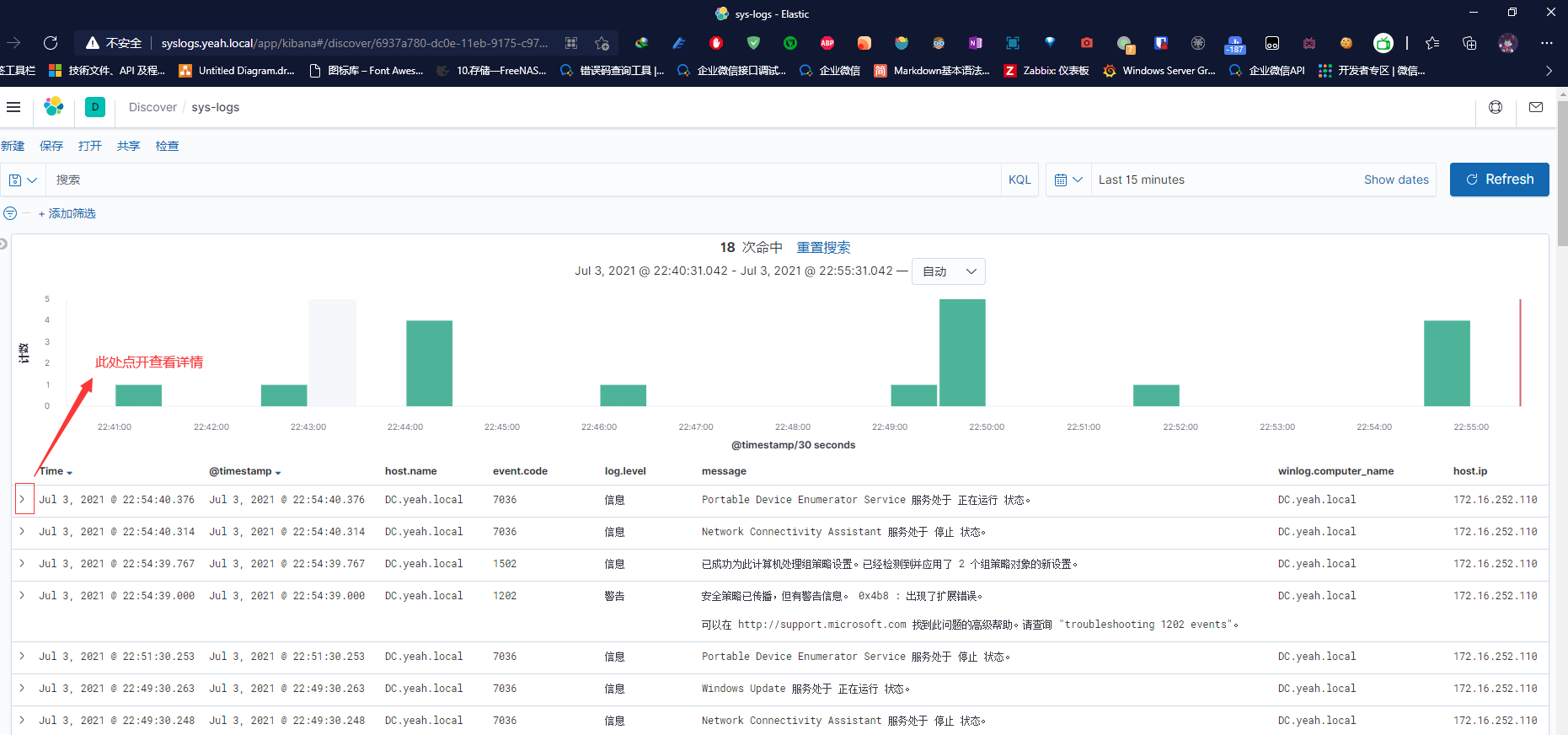

Viewing logs in the index

Choose which fields to display according to your needs

View the logs

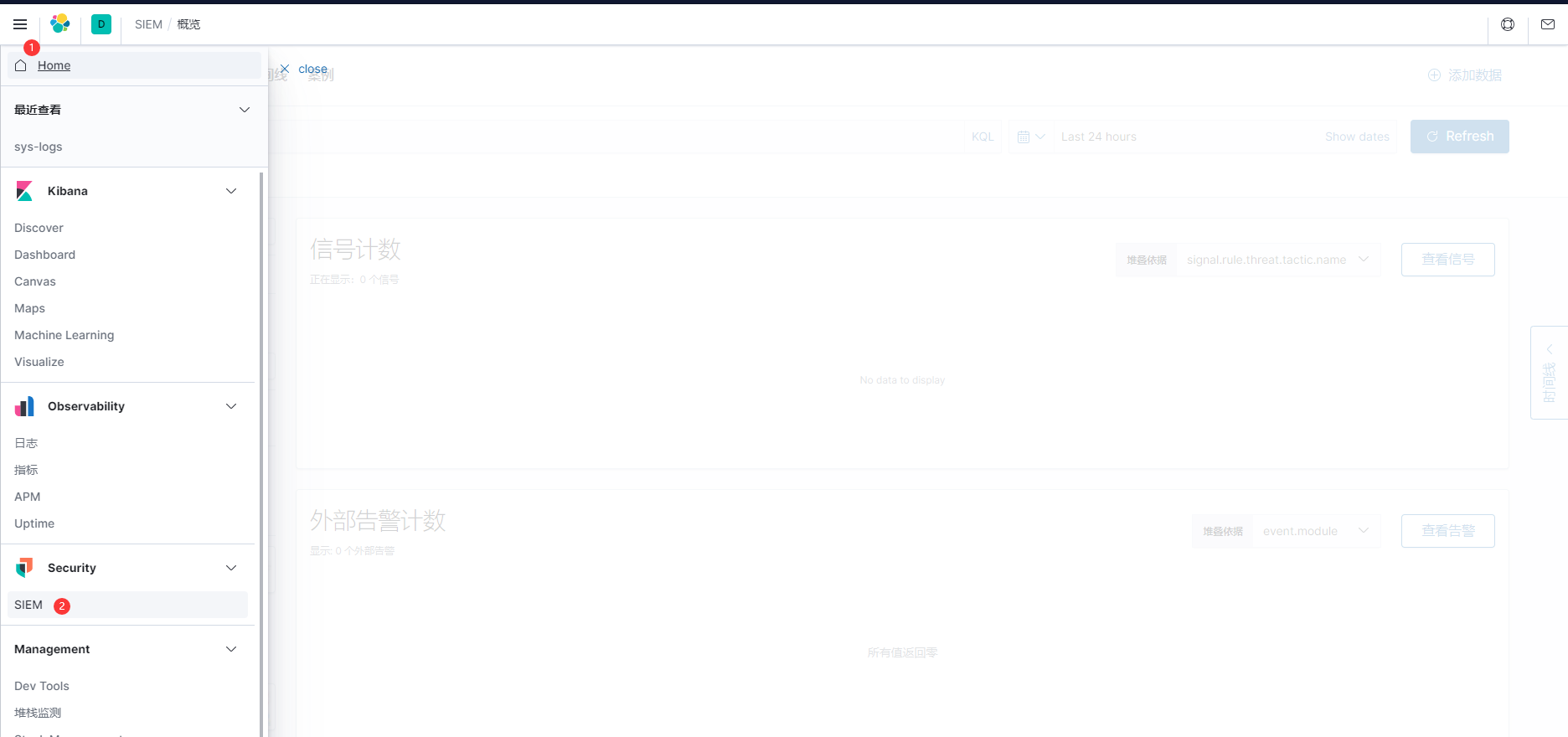

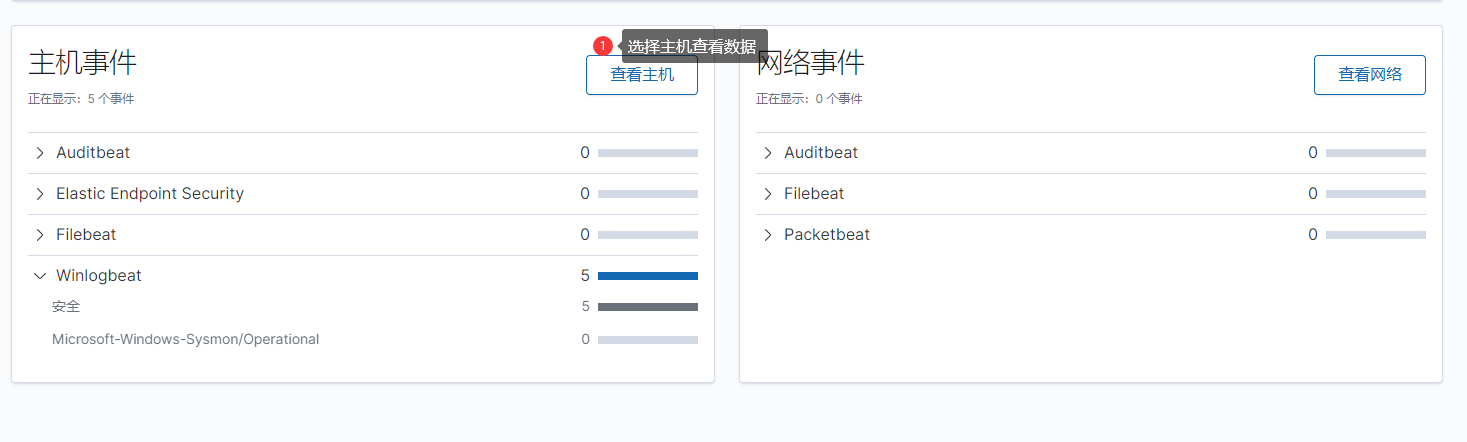

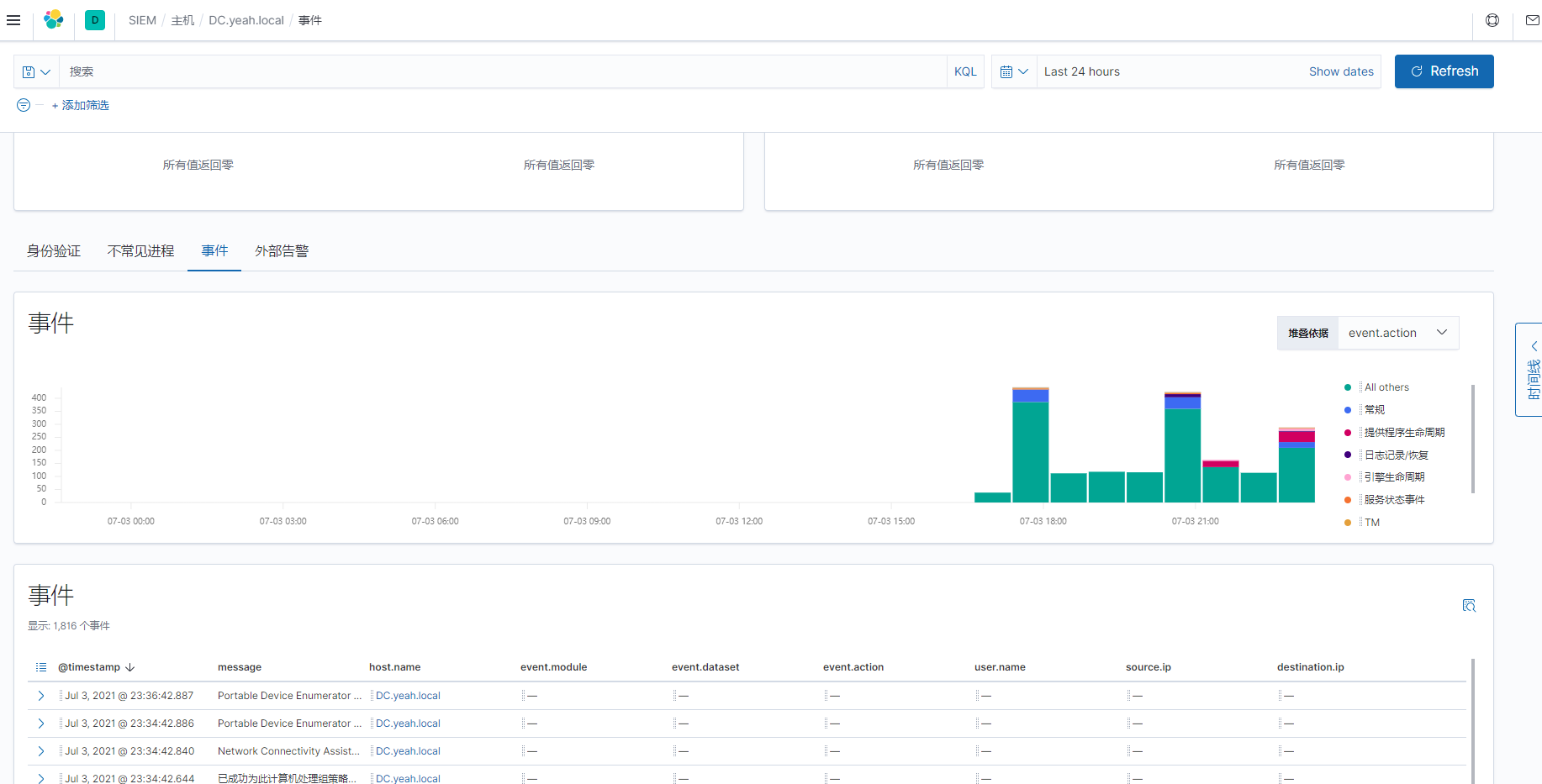

Viewing events and statistics by host

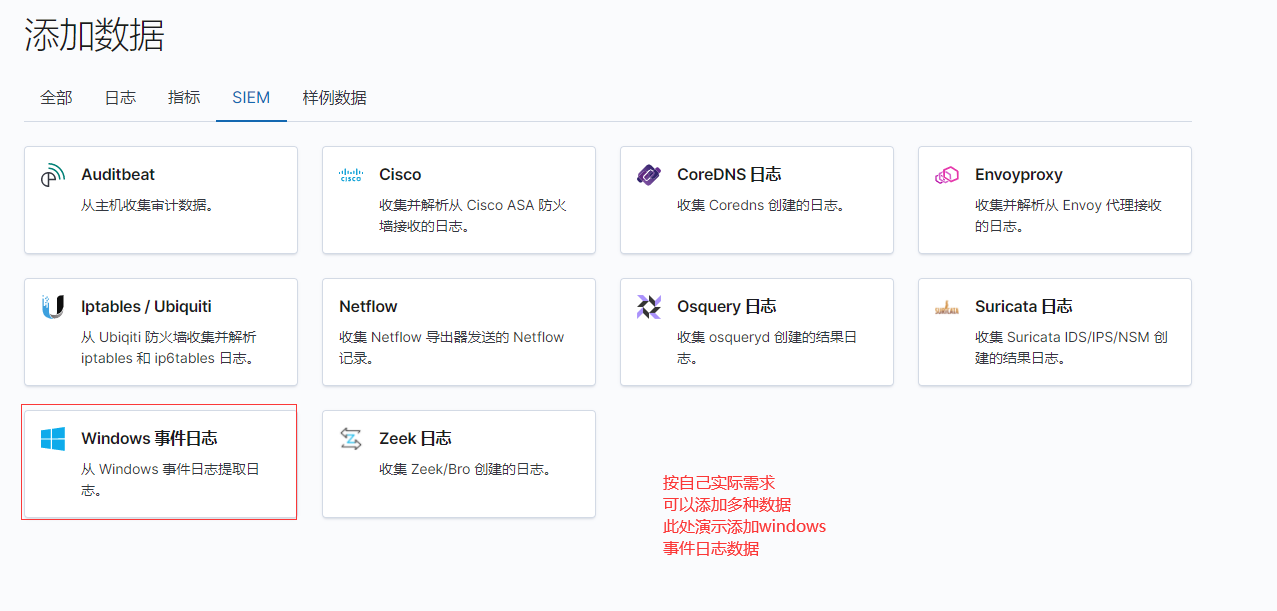

Select the data type

Review and apply the data

Select a host and view its data

Browse whichever data types you care about

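That covers the setup and basic operation of EFK with Winlogbeat for monitoring Windows. As a further step, you can run Nginx as a reverse proxy on the host that runs the EFK containers. Most corporate environments run their own DNS, so you can create an A record or a CNAME alias and have Nginx forward requests for that internal or public domain name to the Kibana container. Users inside or outside the network can then reach Kibana by domain name, which is easier to remember than ip:port. The other EFK features can be explored later as needed.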
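
The reverse proxy idea can be sketched as a small generator for an nginx server block. This is an illustration under assumptions, not a tested production configuration: the function name `kibana_proxy_conf` and the domain `kibana.example.internal` are hypothetical, and Kibana is assumed to be reachable from the proxy at 127.0.0.1:5601 through the published port.

```shell
# Print a minimal nginx server block that forwards a domain to Kibana.
# Arguments: <server name> <Kibana address as host:port>
# (kibana_proxy_conf and the domain used below are hypothetical.)
kibana_proxy_conf() {
  cat <<EOF
server {
    listen 80;
    server_name $1;

    location / {
        proxy_pass http://$2;
        proxy_set_header Host \$host;
        proxy_set_header X-Real-IP \$remote_addr;
    }
}
EOF
}

kibana_proxy_conf kibana.example.internal 127.0.0.1:5601
```

The output can be saved into the nginx configuration directory of the proxy host and loaded with an nginx reload, once the DNS record for the chosen name points at that host.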